A Changing Landscape

In 2011, I was interviewed for a programming job at a local company and, as part of the interview, I was asked to provide some samples of my coding. I brought a couple of significant examples I was happy with and let the interviewer look them over. He immediately asked me to walk him through the code line by line, and I knew he wanted to make sure I’d written it myself. I explained it to him and got the job.

That was long before we had AI systems that could write fully formed code on request. Within a month of ChatGPT hitting the public consciousness, I was seeing prominent coding instructors on YouTube advocating its use to increase productivity (with cautions about its accuracy), along with even more videos from people boasting about “creating” entire applications by copying and pasting code from ChatGPT into an IDE. One game developer used it to generate a list of steps for creating a Flappy Bird clone and then a complete set of code. As various features failed to work, he pointed out the errors to the AI, and it gave him corrected code.

The next step was predictable: many people who wanted to learn coding started asking whether they should bother, given AI’s ability to generate any code they needed. That question, and other questions about the future of programmers in general, became a daily feature on Reddit, to the point that at least one user speculated the questions might actually come from trolls trying to rile up the professional programmers in the group.

Aspiring programmers who are only after a paycheck will probably be the first to be discouraged by AI’s prominence and will switch to other fields. Some companies are already hiring highly paid “AI whisperers” who supposedly specialize in getting the best results out of the new systems. I suspect this role won’t last long, since it runs counter to the whole point of a system that’s supposed to remove the barriers to information gathering and creativity by responding to natural language prompts.

For the programmers who remain, AI will become a valuable tool that will be used, and sometimes overused, to increase productivity. Companies will eagerly adopt AI code generation and might rely on it more and more until they realize that their programmers can no longer support the code they’ve “written”. This is simply a natural trend: individuals and companies try to get as much as they can at the lowest cost until they reach the point where the obvious sacrifices in quality outweigh any savings they’ve achieved.

I’m not condemning AI as a productivity tool. It can be an improvement over searching through endless StackOverflow posts and Google dead ends for that one answer that applies to my situation. Still, ChatGPT can only tell you what it has learned from the internet, and it gives wrong answers too often. When it’s wrong, it’s usually confidently wrong, just like the internet it was trained on.

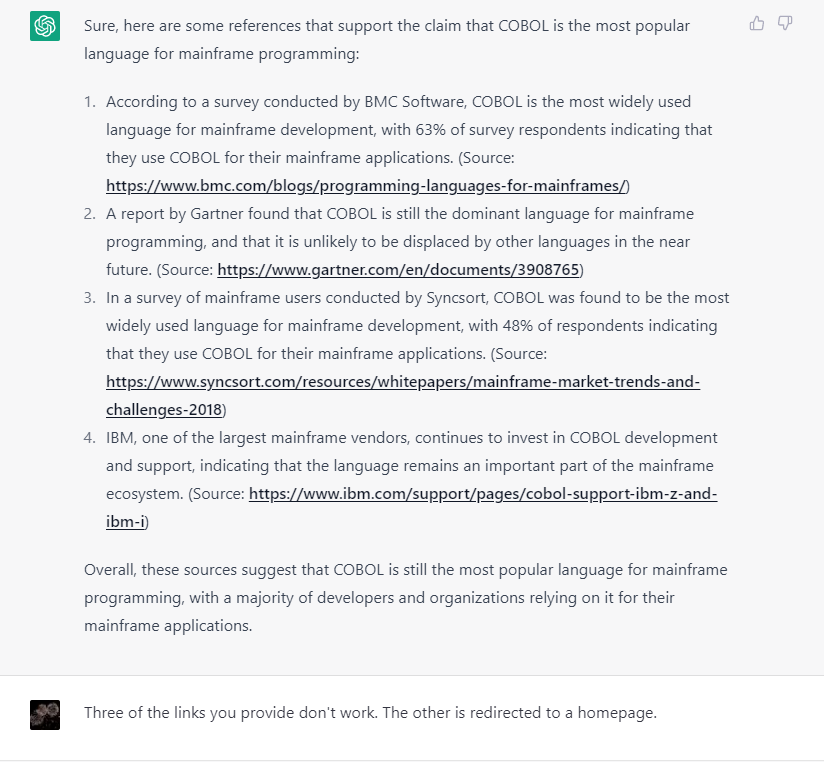

As programmer Tim Corey noted in one of his recent videos, there’s another limitation that compounds this problem, and it’s one I saw almost immediately in my conversations with ChatGPT. AI gives you a conclusion based on the information it’s been fed, while Google searches give you the authorities and sources from which you can draw your own conclusion. When I questioned ChatGPT about its sources, it couldn’t name them. It has since been updated to try to provide sources, but it has a habit of giving broken links that lead to 404 pages. Google’s Bard couldn’t provide sources either, although the new Bing AI explicitly lists reference sites as part of its answer.

Where do we go from here?

I predicted earlier that companies will try to get what they can from AI, with mixed results. No doubt the systems will improve as the tech companies race to release new versions and abilities. Still, I believe there will always be an inherent limitation in a system that has no volition, no ability to truly understand motivations and no personal stake in the answers it provides. These are not truly intelligent systems; they are language models, admittedly impressive ones, that have the potential to be used as a crutch by professionals, entrepreneurs and students who want to get by with minimum effort.

That’s where we are, though. The genie is out of the bottle and it’s not going back. There might be attempts at legislation or self-policing on the part of the industry, but those have always been leaky solutions. This thing will run its course.

My perspective on this is a personal one; it’s about what it means to be a programmer. Programming is a very different field from what it was when I started in the 90s. Even before AI, tools had advanced to make coding much easier, Google had made answers available with a simple query, and programming talent had become commoditized to the point where many aspiring programmers are motivated by the promise of a large salary. I’ve benefited from all of these things.

There is one standard I know I have to maintain for the activity to have real meaning for me: I take complete responsibility for every line of code I put under my name. That means understanding the code and the concepts behind it and, if possible, writing it myself, because I am the one deploying that code, collecting the paycheck and claiming the expertise. I’m glad for the references, the discussion areas and even an AI assistant, but ultimately the code is my responsibility. As long as I call myself a programmer, nobody else can do it for me. If I lose that, I might as well put down the tools and find something else I can take ownership of.

That’s why I am declaring my work as my own with the ByHumans logo you see here. For the past couple of months, I’ve been working on the Rogue C# series of tutorials, and I can proudly say that the code in the project is my own. I’ll ask ChatGPT general questions about how to do something, but I write the specific project code myself, sometimes using its ideas and sometimes not. Copying and pasting code from it would simply take the fun out of the project.

I’d be glad to see others make similar declarations for their own code because, ultimately, that’s how the questions of responsibility and even copyright surrounding AI-generated works will be resolved. It’s up to creators to reject the crutch, in whatever form it’s offered, and take ownership of their own work, and it’s up to consumers to understand the continuing value of work that results from Human Intelligence.